In today's rapidly evolving business landscape, staying competitive means harnessing the power of artificial intelligence and machine learning. One remarkable way to achieve this is through knowledge embedding. Imagine having an AI brain that not only comprehends your business operations but also leverages this knowledge to make informed decisions on a day-to-day basis.

This article will explore knowledge base embedding in depth. We'll delve into its practical applications, focusing on business automation and workflow optimization. By the end of this journey, you'll understand how to equip GPT with your company's knowledge, ensuring that it operates as an extension of your top performers. We'll also present a real-world case study on how to automatically draft customer emails based on your company's best practices. Let's embark on this exciting journey into the realm of knowledge embedding and AI-enhanced business operations.

The Power of Knowledge Embedding

Knowledge embedding is a game-changer in the realm of AI. It enables machines to grasp the nuances of intricate data by representing information as vectors. These vectors, sometimes referred to as embeddings, encode the relationships and similarities between data points, allowing AI systems to understand and use this information effectively. In essence, knowledge embedding empowers AI to think contextually and make decisions based on the wealth of knowledge it has absorbed.

The Need for Company-Specific Knowledge

While generic AI models are undoubtedly impressive, they lack the ability to tap into the specialized knowledge that sets your business apart. Enter company-specific knowledge. Your organization's Standard Operating Procedures (SOPs), proprietary data, and unique industry insights can't be easily imparted to a standard AI model. This is where knowledge embedding becomes invaluable. It equips AI with the capacity to grasp and utilize your company's distinct expertise, making it a formidable asset in your daily operations.

The Role of Embedding and Vector Storage

To understand how knowledge embedding works, it's essential to recognize the role of embedding and vector storage. Embedding, in simpler terms, is the representation of data points in relation to each other. Think of it as arranging similar items close together in a multi-dimensional space. However, embedding models go beyond two dimensions; they work with hundreds or even thousands, making them incredibly versatile. These embedding models, such as OpenAI's embeddings, have learned representations of data relationships, like words with similar meanings.

Vector storage, on the other hand, is where these vector representations are stored and retrieved efficiently. Specialized databases, like Pinecone and Chroma, excel in managing and retrieving vector data. The synergy between embedding and vector storage is pivotal in creating an AI system that can answer complex questions and perform intricate tasks.

Understanding Knowledge Embedding

A. What is Embedding?

Simplifying the Concept of Embedding

In the world of AI and machine learning, "embedding" might sound like a complex concept, but at its core, it's about representing data in a way that captures relationships between different data points. Think of it as creating a map where each data point is like a landmark, and the distances between them on the map reflect their similarities or differences.

Embedding Models and Their Functionality

- Embedding models are essentially tools that help us transform data into these meaningful maps, known as vectors. These vectors are essentially lists of numbers, and they capture various aspects of the data's characteristics.

- For instance, embedding models can take words and convert them into vectors. This means that words with similar meanings will have vectors that are closer together in this high-dimensional space, while words with different meanings will be farther apart.

B. Vector Databases

Now that we understand what embedding is, let's talk about vector databases. These databases are specialized systems designed to store and efficiently retrieve these vectors. They are like the libraries that house all our maps, making them easily accessible for various purposes.

Key Players in Vector Databases (e.g., Pinecone, Chroma)

In the world of vector databases, you have some prominent players like Pinecone and Chroma. These platforms are optimized for managing vector data and conducting operations like similarity searches, which can be incredibly useful in various applications.

C. How Embedding Models and Vector Databases Work Together

So, how do embedding models and vector databases collaborate? Imagine you have a question or a query, and you want to find relevant information from a large dataset. You start by using an embedding model to convert your query into a vector. This vector represents the essence of your question in a high-dimensional space.

Now, you can use a vector database to search for vectors that are similar to your query vector. The database will return data points (or vectors) that are close in this high-dimensional space, effectively giving you results that are relevant to your query.

This combination of embedding models and vector databases is incredibly powerful because it allows you to find similarities or patterns in data that might not be apparent through traditional keyword-based searches. It's like having a super-smart librarian who can instantly find the most relevant information for you, even if you don't know exactly what you're looking for.

D. Role in Enabling AI to Have Domain-Specific Knowledge

The central role of knowledge base embedding is to bridge the gap between general AI capabilities and industry-specific expertise. Traditional AI models lack the ability to comprehend the nuances of a particular domain. Knowledge base embedding addresses this limitation by integrating domain-specific information into the AI model's cognitive framework. This empowers the AI to speak the language of the business, making it a valuable resource for tackling industry-specific challenges.

Example Scenarios Where Knowledge Base Embedding Is Beneficial

- Customer Support: Imagine a scenario where a customer support agent is faced with a complex inquiry related to a company's product. Knowledge base embedding enables AI to instantly retrieve relevant product information, historical customer interactions, and best-practice responses. This not only improves response times but also ensures consistency in customer support.

- Market Research: For businesses conducting market research, having AI equipped with knowledge base embedding can be a game-changer. It can analyze vast amounts of industry-specific data, identify trends, and provide valuable insights for strategic decision-making.

- Compliance and Regulations: Industries like finance and healthcare are bound by strict regulations. Knowledge base embedding ensures that AI models are well-versed in compliance requirements, enabling them to flag potential issues and ensure adherence to guidelines.

- Content Generation: Content marketing is a crucial aspect of modern businesses. With knowledge base embedding, AI can generate content that aligns perfectly with a company's brand and expertise, saving time and maintaining consistency.

Comparison Between Fine-tuning and Knowledge Base Embedding

| Aspect | Fine-Tuning | Knowledge Base Embedding |

| Purpose | Customizing AI models for specific tasks or styles. | Equipping AI with domain-specific knowledge. |

| Data Used | Requires labeled data for specific tasks. | Utilizes an organization's proprietary knowledge base. |

| Scope | Task-specific; narrow focus. | Domain-specific; broader applicability within the organization. |

| Adaptability | Can adapt to new tasks with training data. | Not easily adaptable to new domains without additional data. |

| Response Context | May lack context awareness outside of the fine-tuned task. | Understands context related to the organization's domain. |

| Use Cases | Chatbots, sentiment analysis, text generation for specific purposes. | Customer support, content generation, compliance adherence, market research, and more. |

| Training Complexity | Requires labeled data and fine-tuning effort. | Involves preprocessing and vectorization of knowledge base data. |

| Response Consistency | Consistent within the fine-tuned task. | Ensures consistency in responses based on organizational knowledge. |

| Decision-Making Support | Limited decision-making support; task-specific. | Offers robust decision support based on domain expertise. |

| Integration Complexity | Integration can be complex for new tasks. | Integration complexity lies in preparing and maintaining the knowledge base. |

Knowledge Base Embedding for Business Automation

In today's fast-paced business landscape, knowledge is power. Companies strive to harness their collective wisdom, best practices, and domain-specific expertise to drive efficiency and stay competitive. However, managing and sharing this knowledge can be a daunting task, especially in large organizations. This is where knowledge embedding and leveraging large language models like GPT come into play.

A. The Challenge of Knowledge in Businesses

- The Problem of Tacit Knowledge

One of the fundamental challenges businesses face is the existence of tacit knowledge. Tacit knowledge refers to the valuable insights, experiences, and know-how that reside in the minds of employees but are not explicitly documented. This type of knowledge can be hard to capture, making it susceptible to loss when employees leave or retire. GPT's knowledge embedding capabilities provide a means to capture and share tacit knowledge effectively.

- Inefficiencies in Traditional Knowledge Sharing

Traditional methods of sharing knowledge, such as long documents or manuals, often prove ineffective and impractical. Lengthy documents are rarely read thoroughly, and the information quickly becomes outdated. Moreover, these documents lack the interactivity and real-time applicability needed for today's dynamic business environment. Leveraging large language models like GPT offers a dynamic and responsive alternative.

B. Leveraging Large Language Models

- Automating Responses Based on Knowledge

Large language models like GPT are capable of much more than generating text. They can be trained to understand and leverage existing knowledge within an organization. When a user has a question, instead of sending it to a generic AI model, knowledge embedding comes into play. This process involves searching for relevant documents or data related to the user's query and then feeding both the user's inquiry and the relevant data to a large language model. This approach enables the AI to generate responses based on real data, significantly enhancing the accuracy and relevance of the answers.

- Bridging the Gap between Top Performers and Junior Employees

In many businesses, there's a noticeable disparity in performance between top performers and junior employees. The knowledge and experience of top performers often remain locked within their heads, creating a knowledge gap that can be difficult to bridge. With knowledge base embedding and large language models, it's possible to automate processes based on the best practices of top performers. For instance, in customer support, the AI can analyze past interactions and emulate the behavior of top performers, ensuring consistent and effective responses. This approach democratizes knowledge and helps junior employees perform at a higher level.

Automatically Drafting Customer Emails

In this section, we'll delve into a real-world case study on how to harness the power of knowledge embedding to automatically draft customer emails, effectively transforming your business processes with AI.

A. Preparing Knowledge Base Data

- Structuring Data for Vectorization: Before we can utilize knowledge embedding, it's essential to structure your data in a way that can be easily vectorized. In this case study, we will focus on customer email interactions as our knowledge base.

- Define the format: Start by structuring your data with clear fields for customer messages and corresponding responses from your top salespeople. This structure will enable efficient vectorization.

- Loading and Inspecting the Data: Once your data is structured, the next step is to load and inspect it to ensure everything is in order before proceeding with the embedding process.

- Utilize a CSV loader: We recommend using a CSV loader tool to load your data. Each row in your CSV file represents a pair of customer messages and responses, making it manageable for vectorization.

- Data validation: Inspect the loaded data to confirm that it aligns with your expectations and doesn't contain any anomalies.

B. Vectorizing Data with Embedding Models

- Using OpenAI Text Embedding Models: To make your knowledge base understandable to AI models like GPT-3, you need to convert your text data into a numerical format. OpenAI's text embedding models come to the rescue here.

- Vectorization process: Utilize text embedding models like OpenAI's to convert the loaded text data into numerical vectors. These vectors represent the semantic meaning of the text, enabling machines to understand it.

C. Implementing Similarity Search

- Creating a Function for Similarity Search: Now that your data is vectorized, it's time to implement a similarity search function. This function will enable you to find the most relevant past responses when new customer messages come in.

- Define the similarity metric: Choose an appropriate similarity metric (e.g., cosine similarity) to compare the vectors of new customer messages with those in your knowledge base.

- Create a function: Develop a function that takes a new customer message as input and returns the most similar past responses from your knowledge base.

- Testing the Similarity Search Function: Before proceeding further, thoroughly test your similarity search function to ensure it provides accurate results.

- Input various customer messages: Test the function with a variety of customer messages to confirm that it accurately identifies similar past responses.

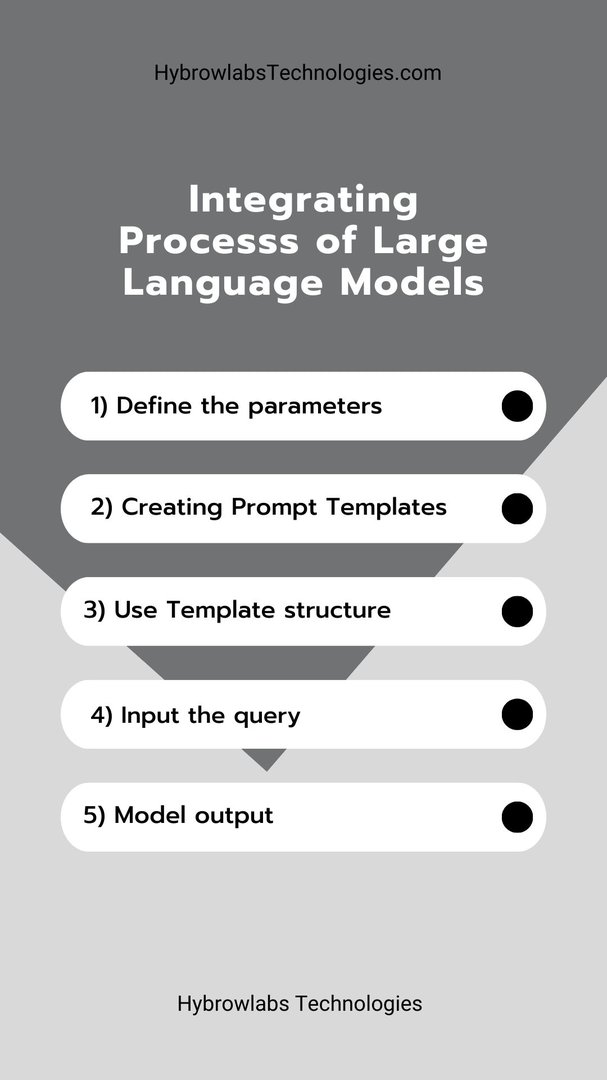

D. Integrating Large Language Models

- Setting up a Large Language Model: In this step, you'll integrate a large language model like GPT-3 into your system to generate responses based on the knowledge retrieved through similarity search.

- Define the parameters: Specify the configuration for your large language model, including the desired temperature and model version (e.g., GPT-3.5).

- Creating Prompt Templates: Craft prompt templates that provide context to the large language model. These templates guide the model in generating responses that align with your business knowledge.

- Template structure: Design templates that introduce the scenario, mention the customer's query, and instruct the model to follow specific guidelines, such as mimicking the style of past responses.

- Generating Responses Based on Knowledge: Once your system is set up, you can now generate responses to new customer messages that are grounded in your business knowledge.

- Input the query: Feed the customer's message and the retrieved past responses into the large language model using your prompt templates.

- Model output: The model will generate a response that adheres to the knowledge embedded in your past interactions, ensuring consistency and accuracy in customer communication.

By following these steps, you can empower your business with the capability to automatically draft customer emails that not only reflect your company's best practices but also bridge the gap between top performers and junior employees. Knowledge embedding, combined with AI models like GPT-3, opens up new avenues for enhancing customer interactions and streamlining business processes.

Real-World Applications

In the rapidly evolving landscape of artificial intelligence (AI) and natural language processing (NLP), knowledge embedding has emerged as a powerful tool for enhancing business insights and decision-making. In this section, we delve into the real-world applications of knowledge base embedding, highlighting its potential to revolutionize how businesses operate, learn, and adapt.

A. Exploring How Knowledge Base Embedding Benefits Businesses

- Enhanced Decision-Making: One of the primary benefits of knowledge base embedding for businesses is its capacity to empower decision-makers with data-driven insights. By embedding domain-specific knowledge into AI systems, organizations can make more informed and strategic decisions. For example, a retail company can embed its historical sales data, enabling AI systems to provide recommendations for optimizing inventory levels.

- Personalized Customer Experiences: Knowledge base embedding can be leveraged to create highly personalized customer experiences. By analyzing past interactions and purchase history, businesses can use AI to offer tailored product recommendations, increasing customer satisfaction and sales.

- Streamlined Content Management: Content-heavy industries like publishing or e-learning can use knowledge embedding to organize and retrieve vast amounts of data efficiently. AI-driven content management systems can automatically categorize, tag, and recommend relevant content, making it easier for users to find the information they need.

- Market Analysis and Trends: Embedding industry-specific knowledge enables AI systems to analyze market trends and competitors' strategies more effectively. Businesses can gain a competitive edge by quickly adapting to changing market conditions and identifying emerging opportunities.

- Efficient Compliance and Risk Management: In sectors subject to strict regulations like finance or healthcare, knowledge base embedding can help ensure compliance. AI systems can scan documents and data for compliance violations, reducing the risk of costly penalties.

B. Overcoming Challenges of Traditional Knowledge Sharing Methods

- Knowledge Silos: In many organizations, knowledge tends to reside in silos, with each department or team hoarding its insights. Knowledge base embedding can break down these silos by centralizing knowledge in a format that is easily accessible and shareable across the entire organization.

- Knowledge Decay: Traditional documents and manuals become quickly outdated, requiring frequent updates. Knowledge base embedding, on the other hand, continuously learns from new data and adapts, ensuring that the knowledge remains relevant and up to date.

- Difficulty in Knowledge Transfer: Transferring knowledge from experienced employees to new hires or junior team members can be challenging. Knowledge base embedding enables businesses to capture the expertise of experienced employees and make it available to everyone, facilitating smoother onboarding and skill development.

- Search and Retrieval Inefficiency: Traditional search engines may struggle to provide accurate results, leading to inefficiencies in finding critical information. Knowledge embedding improves search and retrieval processes by understanding context and relevance, delivering more accurate results.

C. Closing the Gap Between Experienced and Junior Employees

- Mentorship in a Digital Form: Knowledge base embedding offers a unique opportunity to digitize and replicate the expertise of senior employees. For instance, an AI system can analyze the decision-making process of top salespeople and provide real-time guidance to junior sales reps, thus closing the experience gap.

- Consistency in Performance: With AI-driven knowledge embedding, businesses can ensure a consistent level of performance across their workforce. Instead of relying solely on the knowledge of a few experts, the entire team can benefit from the collective wisdom embedded in the system.

- Reduced Reliance on Experts: Organizations often face challenges when key experts leave the company or retire. Knowledge base embedding mitigates this risk by preserving and disseminating their knowledge, reducing the impact of personnel changes.

- Continuous Learning: Junior employees can continuously learn from the best practices of their senior counterparts. AI-powered systems can track the evolution of best practices and adapt, ensuring that employees are always working with the most effective strategies.

D. Use Cases in Automating Customer Interactions and Email Responses

- Efficient Customer Support: Knowledge base embedding revolutionizes customer support by enabling automated responses based on historical interactions and best practices. When a customer submits a query or complaint, AI can instantly search the knowledge base and provide a personalized and informed response, reducing response times and enhancing customer satisfaction.

- Email Responses: In a world where email communication is prevalent, businesses can use knowledge embedding to automate email responses effectively. For example, a sales representative can receive an inquiry from a prospective client, and the AI system can generate a response based on past successful interactions, ensuring a consistent and professional tone.

- Elevating Sales and Marketing: Embedding best practices in sales and marketing efforts can yield significant benefits. AI can analyze successful sales pitches, customer engagement strategies, and marketing campaigns to guide future efforts. This ensures that businesses are continually improving their strategies based on real data and outcomes.

- Customized Product Recommendations: E-commerce platforms can harness the power of knowledge base embedding to offer personalized product recommendations via email. By considering a customer's browsing history, purchase behavior, and preferences, AI can suggest products that align with the customer's interests, increasing the likelihood of conversions.

V. Conclusion

In conclusion, harnessing the power of knowledge embedding to empower your business with AI-driven insights is a transformative journey that can yield substantial benefits. As we wrap up this article, let's address some frequently asked questions (FAQs) about knowledge embedding and its application in giving GPT your business knowledge.

FAQ

FAQ 1: What exactly is knowledge embedding?

Knowledge embedding is a technique used to represent complex data, such as business information, in a structured and understandable manner for AI models.It involves converting textual or numeric data into multi-dimensional vectors that capture relationships and similarities between different data points.

FAQ 2: How can knowledge embedding benefit my business?

Knowledge embedding enables your AI systems, like GPT-3, to have a deep understanding of your company's domain-specific data.

It facilitates quick and accurate retrieval of specific information, improving decision-making processes and customer interactions.

By automating tasks and responses based on your business knowledge, you can enhance efficiency and productivity.

FAQ 3: Are there specialized tools for knowledge embedding?

Yes, there are specialized tools like Pinecone and Chroma that are designed to store and retrieve vectorized data efficiently. These vector databases play a crucial role in knowledge embedding.

FAQ 4: How can knowledge embedding help bridge the gap between experienced and junior employees?

Knowledge embedding can be used to capture and mimic the behavior of top-performing employees.

When junior employees have access to AI-driven responses and actions based on best practices, they can perform at a higher level, leading to more consistent results.

FAQ 5: What are some potential future applications of knowledge embedding in business?

Knowledge embedding can be applied to various business scenarios, such as automating customer support, content generation, and even decision-making processes. It has the potential to revolutionize knowledge management within organizations, making critical data readily available and actionable.

ed0eb2.jpg)

a3dc85.jpg)

.jpg)

fd8f11.png)

.jpg)

.jpg)