Large Language Models (LLMs) have ushered in a new era of software development, offering powerful new capabilities for natural language processing and understanding. However, harnessing LLMs effectively requires a well-defined architecture that accounts for their unique characteristics. In this article, we delve into the emerging architectures for LLM applications, providing insights into the systems, tools, and design patterns used by AI startups and tech companies. We've drawn inspiration from discussions with industry experts, and we'll also introduce some additional insights and considerations. If you would like to learn how to create LLM apps, make sure to check out our blog.

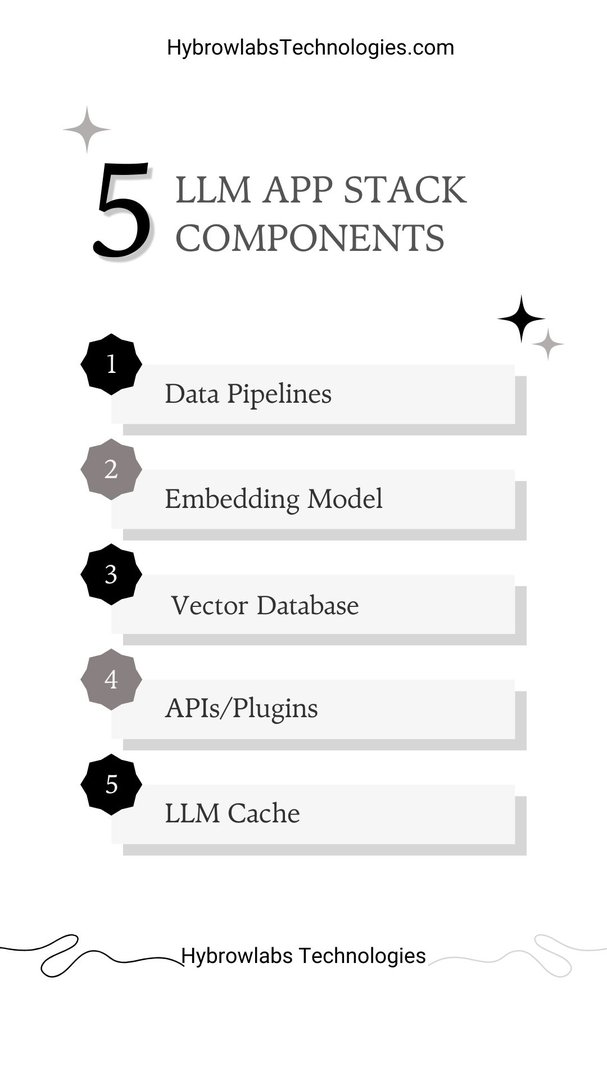

The LLM App Stack

Before we dive into the architecture, let's take a look at the components that constitute the LLM app stack. This stack serves as a foundation for building LLM-powered applications:

- Data Pipelines: Data is the lifeblood of LLM applications. Efficient data pipelines are essential for collecting, preprocessing, and storing textual and structured data.

- Embedding Model: LLM applications use embedding models to convert text and other data into numerical vectors, so that semantically similar content can be found by comparing vectors.

- Vector Database: A specialized database for storing and retrieving these numerical embeddings, facilitating fast and efficient querying.

- Playground: A development environment where developers can experiment with prompts and models and iterate on their applications.

- Orchestration: Tools for managing the flow of data, prompts, and responses within the LLM application.

- APIs/Plugins: Interfaces that enable interaction with LLMs, external services, and data sources.

- LLM Cache: A caching layer that stores responses to repeated requests, reducing latency and API costs.

- Logging/LLMops: Tools for monitoring, logging, and managing LLM operations.

- Validation: Systems for checking LLM outputs for correctness and expected format before they are used.

- App Hosting: Infrastructure and platforms for hosting the LLM-powered application.

- LLM APIs (proprietary): APIs from vendors such as OpenAI for accessing their proprietary models.

- LLM APIs (open): Open-source or community-driven APIs for LLM interaction.

- Cloud Providers: Cloud platforms where LLM applications can be deployed and scaled.

- Opinionated Clouds: Specialized cloud offerings tailored for LLM applications.

This stack forms the backbone of LLM-driven applications and provides the necessary infrastructure for their development and deployment.

In-Context Learning: The Core Design Pattern

One of the foundational design patterns for building with LLMs is "in-context learning." This approach involves using LLMs without extensive fine-tuning and instead controlling their behavior through clever prompts and conditioning on contextual data. Let's explore this pattern in more detail.

In-Context Learning Workflow

The in-context learning workflow can be divided into three key stages:

- Data Preprocessing / Embedding: Private data, such as legal documents in a legal advice application, is broken into chunks, transformed into embeddings, and stored in a vector database.

- Prompt Construction / Retrieval: When a user submits a query, the application constructs prompts for the LLM. These prompts combine developer-defined templates, few-shot examples, data retrieved from external APIs, and relevant documents from the vector database.

- Prompt Execution / Inference: The prompts are sent to a pre-trained LLM for inference. Some developers incorporate operational systems like logging and caching at this stage.
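
To make the three stages concrete, here is a minimal, dependency-free sketch. It rests on loud assumptions: `toy_embed` is a hypothetical hashing embedding standing in for a real model such as text-embedding-ada-002, `InMemoryVectorStore` stands in for a vector database, and the prompt template and legal snippets are invented for the example. Stage 3 is left as a comment because it depends on which LLM API you call.

```python
import math
import re
import zlib
from collections import Counter

DIM = 1024  # toy embedding size (assumption, chosen only for this sketch)


def toy_embed(text: str) -> list[float]:
    """Hypothetical stand-in for a real embedding model: hashes word counts
    into a fixed-size vector and L2-normalizes it."""
    vec = [0.0] * DIM
    for word, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        vec[zlib.crc32(word.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InMemoryVectorStore:
    """Stage 1: keeps (embedding, chunk) pairs; a stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: list[tuple[list[float], str]] = []

    def add(self, chunk: str) -> None:
        self.items.append((toy_embed(chunk), chunk))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = toy_embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        ranked = sorted(
            self.items,
            key=lambda item: sum(a * b for a, b in zip(q, item[0])),
            reverse=True,
        )
        return [chunk for _, chunk in ranked[:k]]


PROMPT_TEMPLATE = """Answer the question using only the context below.

Context:
{context}

Question: {question}
Answer:"""


def build_prompt(store: InMemoryVectorStore, question: str, k: int = 2) -> str:
    """Stage 2: fill a developer-defined template with the top-k retrieved chunks."""
    context = "\n".join(f"- {chunk}" for chunk in store.search(question, k))
    return PROMPT_TEMPLATE.format(context=context, question=question)


store = InMemoryVectorStore()
for chunk in [
    "A lease may be terminated with 30 days written notice.",
    "Security deposits must be returned within 14 days.",
    "The office is closed on public holidays.",
]:
    store.add(chunk)  # stage 1: embed and index each chunk

prompt = build_prompt(store, "How much notice is needed to terminate a lease?", k=1)
print(prompt)  # stage 3 would send this prompt to an LLM instead of printing it
```

Adding a new document is just another `store.add(...)` call, which is why this pattern can pick up fresh data without retraining the model.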

Advantages of In-Context Learning

In-context learning simplifies the process of building LLM applications by reducing the need for extensive fine-tuning. It turns much of AI development into a data engineering problem that both startups and established companies can tackle. It is particularly beneficial for applications with relatively small datasets, and it can adapt to new data in near real time: new documents only need to be embedded and added to the vector database, with no retraining of the model.

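A common question is what happens as the underlying model's context window expands: could developers simply place all of their data directly in the prompt? This is an active area of research, but larger prompts increase both the cost and the time of inference. Even if cost scaled only linearly with context length, filling a very large context window on every request could be significantly more expensive than sending a prompt that contains only a few retrieved documents.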
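
To put rough numbers on the cost argument, here is a back-of-the-envelope calculation. Every figure in it is an assumption for illustration: the per-token price, the traffic, and both prompt sizes are made up, and cost is assumed to scale linearly with input tokens (the best case discussed above).

```python
def input_cost_usd(prompt_tokens: int, price_per_1k_tokens: float) -> float:
    """Input-token cost of one request, assuming cost grows linearly with prompt length."""
    return prompt_tokens / 1000 * price_per_1k_tokens


PRICE_PER_1K = 0.01        # hypothetical price in USD, not any vendor's actual rate
REQUESTS_PER_DAY = 10_000  # hypothetical traffic

retrieval_tokens = 3_000   # template + a few retrieved chunks (assumed size)
stuffed_tokens = 100_000   # whole corpus placed in the context window (assumed size)

for label, tokens in [("retrieval", retrieval_tokens), ("stuffed", stuffed_tokens)]:
    per_request = input_cost_usd(tokens, PRICE_PER_1K)
    print(f"{label:>9}: ${per_request:.2f}/request, ${per_request * REQUESTS_PER_DAY:,.0f}/day")
```

Under these made-up numbers, the stuffed prompt costs about 33 times more per request (100,000 / 3,000 tokens), and the same ratio carries over to daily spend, before accounting for the extra latency of processing the longer prompt.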

To explore in-context learning further, refer to community resources such as the a16z AI Canon, especially its "Practical guides to building with LLMs" section.

Handling Contextual Data

Contextual data is a critical component of LLM applications, encompassing text documents, PDFs, structured data like CSV or SQL tables, and more. The way developers handle this data varies, but there are some common approaches:

- Traditional ETL Tools: Many developers use established tools like Databricks or Airflow for data extraction, transformation, and loading.

- Document Loaders: Some frameworks like LangChain and LlamaIndex offer document loading capabilities, simplifying the handling of unstructured data.

- Embedding Models: Developers often utilize the OpenAI API's text-embedding-ada-002 model for transforming textual data into embeddings.

- Alternative Embedding Models: The Sentence Transformers library from Hugging Face provides an open-source alternative for creating embeddings tailored to specific use cases.

- Vector Databases: Vector databases such as Pinecone provide efficient storage and similarity search over embeddings. Alternatives include open-source vector databases such as Weaviate, Qdrant, and Chroma, as well as extensions to OLTP databases, such as pgvector for PostgreSQL.
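
Whichever loader you use, long documents are usually split into overlapping chunks before embedding, so each chunk fits the embedding model's input limit and context near a chunk boundary is not lost. The function below is a minimal word-based sketch; `chunk_text`, its default sizes, and counting words rather than tokens are all assumptions for illustration. Frameworks such as LangChain and LlamaIndex ship their own, more sophisticated splitters.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into windows of `chunk_size` words, where each window repeats
    the last `overlap` words of the previous one."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # this window reached the end of the text
            break
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
print(chunk_text(sample, chunk_size=4, overlap=1))
```

Larger overlaps preserve more context across boundaries but produce more chunks to embed and store, so the sizes are worth tuning against your own retrieval quality.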

Prompts and Interaction with LLMs

Effectively prompting LLMs and incorporating contextual data require thoughtful strategies. While simple prompts may suffice for initial experiments, more advanced techniques become essential for production-quality results. Some strategies include:

- Zero-Shot and Few-Shot Prompting: Giving the model either direct instructions alone (zero-shot) or instructions plus a few example inputs and outputs (few-shot).

- Advanced Prompting Strategies: Techniques such as chain-of-thought, self-consistency, generated knowledge, and directional stimulus prompting can improve results on more complex tasks.

- Orchestration Frameworks: Tools like LangChain and LlamaIndex simplify prompt chaining, external API interactions, contextual data retrieval, and state management.

- ChatGPT as an Alternative: While typically considered an application, ChatGPT's API can serve similar orchestration functions, offering a more straightforward approach to prompt construction.
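
Zero-shot and few-shot prompting come down to how the prompt string is assembled. The helper below is a minimal sketch; its name, the `Input:`/`Output:` layout, and the sentiment examples are assumptions for illustration, and orchestration frameworks such as LangChain and LlamaIndex provide their own prompt-template utilities.

```python
def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Instruction, then example input/output pairs, then the new input.
    An empty `examples` list yields a zero-shot prompt."""
    lines = [instruction.strip(), ""]
    for example_input, example_output in examples:
        lines += [f"Input: {example_input}", f"Output: {example_output}", ""]
    lines += [f"Input: {query}", "Output:"]  # the model completes this last line
    return "\n".join(lines)


instruction = "Classify the sentiment of the input as positive or negative."
examples = [("I love this product.", "positive"), ("The support was useless.", "negative")]

print(few_shot_prompt(instruction, [], "Setup took five minutes."))        # zero-shot
print(few_shot_prompt(instruction, examples, "Setup took five minutes."))  # few-shot
```

Ending the prompt with a bare `Output:` nudges the model to answer in the same short format the examples demonstrate.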

LLM Model Selection

Choosing the right model is crucial for application performance. OpenAI, with models like gpt-4 and gpt-4-32k, is a popular starting point. However, as applications scale, developers explore other options:

- Cost-Effective Models: Switching to models like gpt-3.5-turbo, which offer lower cost and faster inference, while still maintaining reasonable accuracy.

- Other Proprietary Vendors: Trying models from vendors such as Anthropic, which can offer customization options, faster inference, and larger context windows.

- Incorporating Open Source Models: Utilizing open-source LLMs, especially when fine-tuning is necessary, in conjunction with platforms like Databricks, Anyscale, Mosaic, Modal, and RunPod.

- Operational Tooling: Implementing operational tools like caching, logging, and model validation for enhanced performance and reliability.
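
Several of these options often end up wrapped around a single model call. The sketch below combines three of them under stated assumptions: an exact-match in-memory cache (a production app would more likely use a shared store such as Redis), a validation rule (here, hypothetically, that the output must be a JSON object), and a fallback that tries a cheaper model first and calls a stronger one only when validation fails. `CachedValidatedClient` and the stub models are hypothetical; in a real app, `cheap` and `strong` would wrap API calls to, for example, gpt-3.5-turbo and gpt-4.

```python
import json
from typing import Callable

ModelFn = Callable[[str], str]  # takes a prompt, returns raw completion text


def is_json_object(text: str) -> bool:
    """Example validation rule (assumption): the output must parse as a JSON object."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False


class CachedValidatedClient:
    """Hypothetical wrapper: exact-match cache, then a cheap model, then a
    stronger model if the cheap model's output fails validation."""

    def __init__(self, cheap: ModelFn, strong: ModelFn, validate: Callable[[str], bool]) -> None:
        self.cheap = cheap
        self.strong = strong
        self.validate = validate
        self.cache: dict[str, str] = {}
        self.calls = {"cheap": 0, "strong": 0}  # simple counters for logging/metrics

    def complete(self, prompt: str) -> str:
        if prompt in self.cache:  # cache hit: no model call, no cost
            return self.cache[prompt]
        self.calls["cheap"] += 1
        answer = self.cheap(prompt)
        if not self.validate(answer):
            self.calls["strong"] += 1
            answer = self.strong(prompt)
            if not self.validate(answer):
                raise ValueError("both models returned output that failed validation")
        self.cache[prompt] = answer  # only validated answers are cached
        return answer


# Stub models standing in for real API calls.
def cheap_stub(prompt: str) -> str:
    return "Sure! The answer is 42."  # not JSON, so validation fails


def strong_stub(prompt: str) -> str:
    return '{"answer": 42}'


client = CachedValidatedClient(cheap_stub, strong_stub, is_json_object)
print(client.complete("What is 6 * 7? Reply as JSON."))
print(client.complete("What is 6 * 7? Reply as JSON."))  # served from the cache
print(client.calls)
```

Here the first request falls back to the strong stub, and the repeated prompt is answered from the cache without calling either model again. Caching only validated answers keeps a bad completion from being replayed to later users.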

Infrastructure and Hosting

LLM applications also require infrastructure and hosting solutions:

- Standard Options: Traditional hosting platforms like Vercel and major cloud providers are commonly used for deploying LLM-powered applications.

- End-to-End Hosting: Startups like Steamship provide comprehensive hosting solutions that include orchestration, data context management, and more.

- Model and Code Hosting: Platforms like Anyscale and Modal allow developers to host both models and Python code in a unified environment.

The Role of AI Agents

While not explicitly part of the reference architecture, AI agents could become an important part of many LLM applications. Agent frameworks such as AutoGPT aim to give LLM-powered apps advanced reasoning, tool usage, and learning capabilities. However, they are still largely experimental and struggle with reliability and with completing tasks consistently.
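
At their core, most agents run a loop: the model picks a tool, the app runs it, and the result is fed back until the model produces an answer. The sketch below shows that loop under heavy simplifying assumptions: the `CALL`/`FINAL` text protocol, the `add` tool, and `scripted_model` (a fake model that follows a fixed script) are all invented for illustration and do not reflect how AutoGPT or any specific framework works.

```python
from typing import Callable, Optional

Model = Callable[[list[str]], str]       # sees the transcript, returns the next step
Tools = dict[str, Callable[[str], str]]  # tool name -> function of a string input


def run_agent(model: Model, tools: Tools, task: str, max_steps: int = 5) -> Optional[str]:
    """Minimal tool-use loop. The model replies with either 'CALL <tool> <input>'
    or 'FINAL <answer>' (a made-up protocol for this sketch). Returns None if no
    final answer is produced within `max_steps`."""
    transcript = [f"TASK {task}"]
    for _ in range(max_steps):
        reply = model(transcript)
        transcript.append(reply)
        if reply.startswith("FINAL "):
            return reply[len("FINAL "):]
        parts = reply.split(" ", 2)
        if len(parts) == 3 and parts[0] == "CALL" and parts[1] in tools:
            observation = tools[parts[1]](parts[2])
        else:
            observation = "ERROR expected 'CALL <tool> <input>' or 'FINAL <answer>'"
        transcript.append(f"OBSERVATION {observation}")
    return None  # step budget exhausted: one of the reliability issues agents face


TOOLS: Tools = {"add": lambda arg: str(sum(int(x) for x in arg.split()))}


def scripted_model(transcript: list[str]) -> str:
    """Stand-in for an LLM: calls the 'add' tool once, then reports its result."""
    last = transcript[-1]
    if last.startswith("OBSERVATION "):
        return "FINAL " + last[len("OBSERVATION "):]
    return "CALL add 2 3"


print(run_agent(scripted_model, TOOLS, "What is 2 + 3?"))
```

The `max_steps` cap matters in practice: a real model can loop on tool calls or emit malformed replies, and the agent must stop rather than run indefinitely.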

Conclusion

The emergence of pre-trained AI models, particularly LLMs, has revolutionized software development. The architectures and patterns outlined in this article are just the beginning. As the field evolves, we can expect to see changes, especially as the context window of models expands and AI agents become more sophisticated.

Pre-trained AI models have democratized AI development, enabling individual developers to create powerful applications quickly. As technology continues to advance, these architectures will evolve, and new reference architectures will emerge to address changing requirements.

If you have feedback or suggestions regarding this article, please reach out. You can also check out our blog on LLMs vs LangChain. The world of LLM applications is dynamic and constantly evolving, and collaboration and knowledge-sharing are essential for its continued growth and innovation.

FAQ

1. What are LLM applications, and why are they considered 'emerging' in the field of software development?

LLM applications are those powered by Large Language Models. They are considered emerging because they represent a relatively new and transformative approach to building software, especially in terms of natural language understanding and processing.

2. What is the core design pattern of 'in-context learning,' and how does it simplify the development of LLM applications?

In-context learning involves using LLMs off the shelf and controlling their behavior through clever prompts and conditioning on contextual data. It simplifies development by reducing the need for extensive fine-tuning and making AI development accessible to a wider range of developers.

3. What are the essential components of the LLM app stack, and why are they important in building LLM-powered applications?

The LLM app stack comprises various components, including data pipelines, embedding models, vector databases, orchestration tools, and more. These components are vital as they provide the infrastructure and tools necessary for collecting, processing, and interacting with LLMs effectively.

4. How can developers effectively handle contextual data in LLM applications, and what tools and approaches are commonly used for this purpose?

Contextual data, such as text documents and structured formats, is crucial in LLM applications. Developers typically use tools like Databricks, Airflow, and vector databases like Pinecone to handle this data efficiently.

5. What considerations should developers keep in mind when selecting LLM models for their applications, and how can they optimize model performance?

When selecting LLM models, developers should consider factors like cost, inference speed, and context window size. Optimizations may include switching to cost-effective models, exploring proprietary vendors, and implementing operational tools like caching and logging for enhanced performance and reliability.
